Development of the PEA-PODS (Perceptions of the Environment and Patterns of Diet at School) Survey for Students

ORIGINAL RESEARCH — Volume 15 — June 28, 2018

Hannah G. Lane, PhD, MPH1; Rebecca Driessen, BS2; Katherine Campbell, MPH1; Rachel Deitch, MS1; Lindsey Turner, PhD3; Elizabeth A. Parker, PhD, RD4; Erin R. Hager, PhD1

Suggested citation for this article: Lane HG, Driessen R, Campbell K, Deitch R, Turner L, Parker EA, et al. Development of the PEA-PODS (Perceptions of the Environment and Patterns of Diet at School) Survey for Students. Prev Chronic Dis 2018;15:170561. DOI: http://dx.doi.org/10.5888/pcd15.170561.

PEER REVIEWED

Abstract

Introduction

Few instruments assess key outcomes of school-based obesity interventions, including student perceptions of school environments and school-specific dietary intake patterns. This study describes development of PEA-PODS (Perceptions of the Environment and Patterns of Diet at School), a 2-part survey to measure these outcomes.

Methods

Part 1 (PEA) assessed student perceptions of policies, physical environment, and practices related to healthy eating and physical activity at school. Part 2 (PODS) assessed usual intake (ie, frequency, location obtained, and foods consumed) of breakfast and lunch. Foods consumed were presented by MyPlate categories (eg, Fruits, Grains). Students in grades 3, 6, and 9 participated in 2 phases: cognitive pre-testing (n = 11) and reliability/validation testing (n = 58). Both surveys were administered 1 week apart to assess test-retest reliability, and 5-day food records were used to validate PODS. Analyses included percent agreement (≥70% = acceptable), Pearson correlations, and Cronbach α’s.

Results

Cognitive pre-testing provided feedback on content, length, and age-appropriateness. Percent agreements were acceptable for test-retest reliability of PEA (71%–96%). The final version included 40 items with Likert-type responses in 4 subscales (α ≥0.78). For PODS, agreement for breakfast and lunch location was ≥75% for both reliability and validation. For foods consumed at breakfast, reliability agreement ranged from 74% to 93%, and validation agreement from 68% to 91%. For foods consumed at lunch, agreement ranges were 76% to 95% and 73% to 88%, respectively.

Conclusion

Both parts of the instrument demonstrate acceptable reliability, and PODS demonstrates acceptable validity. These findings support the use of PEA-PODS to assess perceptions of the environment and usual dietary intake patterns in school-based obesity prevention initiatives.

Introduction

Schools are a crucial setting for childhood obesity prevention efforts (1,2). Children spend much of their day at school, and schools strongly influence attitudes and behaviors related to healthy eating and physical activity (PA) (3,4). Federally mandated, district-level Local Wellness Policies (LWPs) guide nutrition and PA policies and practices in schools to enable these behaviors (5,6). The degree of LWP implementation in schools affects their impact; thus, it is important to evaluate both school-level implementation and student-level impact (7,8).

LWP implementation and schools’ policies and practices for healthy eating and PA are commonly assessed through single-reporter administrator surveys and interviews (7,9). This method introduces a high likelihood of bias and does not capture the students’ perceptions, which recent qualitative studies suggest are as meaningful as the policies and practices themselves for improving behavioral and weight outcomes (10–13). Brief, psychometrically sound measures are needed to quantify student perceptions and understand these outcomes.

In addition to understanding student perceptions, instruments are needed to assess the impact of LWP implementation on student behaviors. Objective assessment of school-day PA (eg, via accelerometry) and weight status is possible; however, no such objective measure exists for diet. Although several validated self-report questionnaires assess diet behaviors in schools, they focus on specific food groups (eg, fruits, vegetables, and beverages) or assess total energy intake and macronutrients (14). The limitations of such measures for children and adolescents are well described in the literature, including a limited ability to recall general patterns within a specific timeframe (14,15). To determine how LWPs influence students’ dietary behaviors during school, an easy-to-understand tool is needed to assess usual dietary patterns, rather than intake volume, across common food categories.

This article describes development of PEA-PODS (Perceptions of the Environment and Patterns of Diet at School). PEA assesses students’ perceptions of policies, practices, and environment for healthy eating and PA, and PODS assesses students’ usual diet at school, including frequency, location, and type of food consumed. Test-retest reliability of PEA-PODS and validity testing of PODS are reported.

Methods

Survey development was an iterative, 3-stage process: development, cognitive pretesting, and reliability/validation. PEA-PODS was developed by a team of researchers with expertise in nutrition and school wellness, and evaluated among students in grades 3, 6, and 9. Study procedures were approved by the University of Maryland, Baltimore institutional review board.

Stage 1: Development

Section 1 (PEA)

We generated a question bank based on LWP requirements and instruments used in previous school-based studies (5,7,9). The question bank items asked students about policies and practices in their overall school, classrooms, cafeteria, and at recess. Items consisted of 5-option Likert-type response sets (“never” to “always” or “totally disagree” to “totally agree”).

No gold standards exist for constructs of student perceptions; however, face validity was established by distributing the initial question bank to a nationwide panel of 10 school wellness researchers who provided feedback and contributed additional items. The final question bank consisted of 47 items (Table 1).

Section 2 (PODS)

To evaluate “usual” diet patterns during the school week, PODS asked students the frequency with which they usually ate breakfast and lunch acquired from various locations (eg, home, school cafeteria, school but not cafeteria, restaurant, or before-school program) during a usual school week, and inquired about the types of foods consumed within each MyPlate category (Fruits, Vegetables, Protein, Grains, and Dairy) (16). As MyPlate is taught widely in schools, it was perceived to be recognizable to and easily understood by most schoolchildren. PODS defined each category by using MyPlate definitions, and included an example of how to break down common foods (eg, slice of pepperoni pizza) into categories. Students were asked 2 questions about each category at each location they selected: how often the category was consumed using a 5-option Likert response set (never to always) and the type of foods consumed from a list of common items in each category (eg, toast, bread, or bagel). Example questions are listed in Table 2.

Stage 2: Cognitive Pre-testing

A cognitive pre-testing process with a convenience sample of 4 students each in grades 3 and 6 and 3 students in grade 9 attending 6 schools (n = 11; 73% male) assessed feasibility and age-appropriateness of PEA-PODS. Caregivers provided written informed consent. Students provided written assent and received a $10 gift card. Students took notes as they completed the survey, then gave feedback to the research team, in groups by grade.

For PEA, this feedback clarified Likert scales (the “agree” scale was confusing for some questions) and wording of several questions (clarification when students have multiple teachers).

For PODS, the feedback led to minor changes to food items within MyPlate categories, some altered wording, a second example of breaking down meals, more detailed instructions, and a “read aloud” feature. Additionally, 3 non-MyPlate categories were added based on participant feedback: Sweet/Salty Snacks, Sauces/Spreads, and Beverages. Following pre-testing, PODS comprised 10 to 175 questions, depending on branching patterns.

Stage 3: Validity/Reliability Study

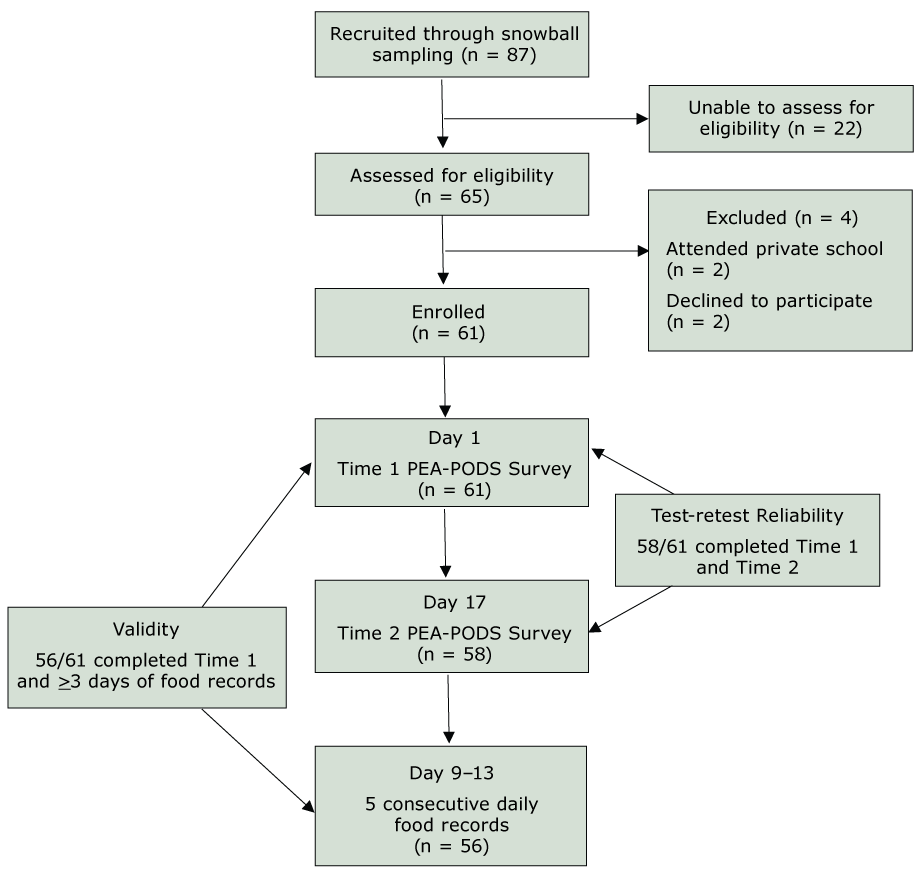

A separate cohort of students in grades 3, 6, and 9 was recruited to assess test-retest reliability of PEA-PODS and validity of PODS (Figure). To detect a minimum correlation of 0.36 with 80% power, our target sample was 60 students. We chose to enroll a broad age range, selecting 20 students each from grades 3, 6, and 9. Students were recruited through snowball sampling, including emails on university listservs, social media postings in parent groups, and advertisements through partners at various institutions across the state. Students who attended public school and could complete online surveys independently were eligible. Parents and guardians were mailed study packets and signed informed consent. Student participants signed assent and received a $50 gift card.
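The target sample size can be roughly checked with a standard power calculation for a correlation coefficient. The sketch below uses the Fisher z approximation with a two-sided α of 0.05. Both choices are assumptions, because the article does not state how the target of 60 was derived, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_for_correlation(r, power=0.80, alpha=0.05):
    """Approximate n needed to detect a correlation r with a two-sided test.

    Assumption: Fisher z approximation; the article does not name its method.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    fisher_z = math.atanh(r)                       # Fisher transform of r
    return math.ceil(((z_alpha + z_beta) / fisher_z) ** 2 + 3)


print(sample_size_for_correlation(0.36))
```

Under these assumptions the result falls just below 60, which is in line with the stated target once rounded up to allow 20 students per grade.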

Figure.

Validation study recruitment, enrollment, and study timeline.

Abbreviations: PEA, Perceptions of the Environment; PODS, Patterns of Diet at School.

PEA-PODS was administered electronically by using Qualtrics Version 2017.11 (Provo, UT) on 2 occasions, 1 week apart (Time 1 and Time 2; Figure). The study took place across a period of 2 usual school weeks (without a designated holiday, teacher workday, or early dismissal). To assess test-retest reliability of PEA-PODS, students were emailed survey links on a weekend day, and asked to think about a “usual” school week in the current school year. To assess validity of PODS, participants completed a daily food record on a smartphone by using MovisensXS App Version 1.1. (Karlsruhe, Germany) after Time 2. The daily record is a commonly used method to validate school-based dietary instruments (14). Students were given a basic Android phone with the application pre-loaded and all other features disabled, and were instructed to fill out the food record once daily between 3:00 pm and 11:59 pm for 5 consecutive school days. The app used wording similar to the PODS survey to assess how often students ate breakfast and lunch, where they acquired each, and what type of foods they consumed.

Data Analysis

Descriptive data were collected from participants during the consenting process. All analyses were conducted in SPSS V.22 (IBM Corp).

PEA

Test-Retest Reliability. Item-by-item percent agreement was used to examine reliability given a tolerance of +/− 1 (eg, if a student answered “Always” at Time 1 and “Most days” at Time 2, responses were considered in agreement) to account for potential minor temporal instability (17). Agreement values between 70% and 79% were considered acceptable, 80% to 89% good, and ≥90% excellent (14,18). Items with poor agreement (<70%) or redundancy within subscales were removed or reworded to enhance clarity, and relevant, theoretical subscales were generated from remaining items. Sum scores were calculated for each subscale at Time 1 and Time 2, and Pearson correlations assessed scale test-retest reliability. Internal consistency of the overall scales and subscales was described by using Cronbach α’s.
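The item-level agreement rule and the internal consistency statistic described above can be sketched as follows. This is an illustration only: the analyses were run in SPSS, the function names are hypothetical, and the responses are invented, coded 1 (“never”) to 5 (“always”).

```python
from statistics import variance


def percent_agreement(time1, time2, tolerance=1):
    """Percent of paired responses that differ by at most `tolerance` points."""
    if len(time1) != len(time2) or not time1:
        raise ValueError("need two equal-length, non-empty response lists")
    matches = sum(abs(a - b) <= tolerance for a, b in zip(time1, time2))
    return 100.0 * matches / len(time1)


def agreement_band(pct):
    """Label a percent agreement using the 70/80/90 cut points in the text."""
    if pct >= 90:
        return "excellent"
    if pct >= 80:
        return "good"
    if pct >= 70:
        return "acceptable"
    return "below acceptable"


def cronbach_alpha(rows):
    """Cronbach's alpha; `rows` holds one list of item scores per respondent."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Invented responses to a single item at Time 1 and Time 2.
time1 = [5, 4, 3, 5, 2, 1, 4, 3, 5, 2]
time2 = [4, 4, 1, 5, 3, 3, 4, 3, 5, 2]
pct = percent_agreement(time1, time2)
print(pct, agreement_band(pct))
```

In this invented example, 8 of the 10 pairs fall within 1 point of each other, so the item would be classed as having good agreement.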

PODS

Test-Retest reliability. Percent agreements assessed test-retest reliability of PODS. For location, agreement was examined for where students reported getting breakfast and lunch during the school week. For frequency, agreement was examined for how often students reported eating breakfast and lunch across locations by using a tolerance of +/− 1, as described above.

For consumption, agreement was examined in 2 ways. First, it was examined for consumption of any food within each MyPlate category (a dichotomous variable was created where 1 represented consumption of any items within that category across locations). Second, a continuous “healthy” composite score was created by using the Dietary Guidelines for Americans to assess whether overall eating patterns could be detected reliably (19). Each food item (eg, toast) was scored separately on a scale of 1–4 (very unhealthy to very healthy) by 3 authors with advanced nutrition degrees, who met to reach consensus (19). To prevent overinflation based on quantity of items selected, mean scores for each MyPlate category were summed to a maximum score of 32 (8 categories × 4 possible points per category). When participants reported getting breakfast, lunch, or both from 2 or more locations, the composite score from the most frequently reported location was used instead of combining all locations, to better identify patterns within locations. Pearson correlation and intraclass correlation coefficients (ICC) of this score described reliability of reporting healthy and unhealthy eating patterns.
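The composite scoring rule (mean item rating per category, summed across categories) can be illustrated with a short sketch. The item ratings below are hypothetical placeholders, not the consensus ratings assigned by the authors, and the treatment of categories with no selected foods (contributing 0) is an assumption that the article does not specify.

```python
from statistics import mean

# Hypothetical ratings: 1 = very unhealthy, 4 = very healthy.
# These are NOT the article's ratings; only 3 of the 8 categories are shown.
ITEM_SCORES = {
    "Fruits": {"apple": 4, "fruit cup in syrup": 2},
    "Grains": {"whole-wheat toast": 4, "sweetened cereal": 2, "donut": 1},
    "Dairy": {"low-fat milk": 4, "flavored milk": 3},
}


def composite_score(selected_by_category):
    """Sum the mean item rating of each category that has a selection.

    Averaging within a category keeps a student who ticks many items
    from scoring higher than one who ticks a single item.
    """
    total = 0.0
    for category, items in selected_by_category.items():
        if items:  # assumption: empty categories contribute nothing
            total += mean(ITEM_SCORES[category][item] for item in items)
    return total


breakfast = {
    "Fruits": ["apple"],
    "Grains": ["whole-wheat toast", "donut"],
    "Dairy": [],
}
print(composite_score(breakfast))
```

With all 8 categories rated on the same 1 to 4 scale, the highest attainable sum is 8 × 4 = 32, matching the maximum stated above.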

Owing to branching patterns, students did not necessarily answer every question; for example, if they reported never eating breakfast, they were not asked further questions about breakfast. Questions with fewer than 20 responses (~35% of the sample) were excluded from analysis.

Convergent validity. Percent agreement was also used to compare participants’ reports of location, frequency (with a tolerance of +/− 1), and consumption at Time 1 to aggregated food record data captured over 5 days. After calculating the composite score described above, Pearson correlation coefficients and ICCs assessed validity of reporting of healthy and unhealthy eating patterns. Only participants with at least 3 days of food records were included in analyses. Days of data were converted into a proportion of a full school week, then condensed into categories to match the survey (eg, 0.8–1.0 = Always).
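The conversion of food record days into survey response categories might look like the sketch below. Only the top band (0.8–1.0 = Always) and the 3-day minimum come from the text. The remaining cut points and labels are assumptions made for illustration, as is the choice to divide by the number of days with records.

```python
def frequency_category(days_eaten, days_recorded):
    """Map food record days to a survey-style frequency label.

    Only the 0.8-1.0 = "Always" band is given in the article; every
    other cut point and label below is a hypothetical placeholder.
    """
    if days_recorded < 3:
        raise ValueError("at least 3 days of food records are required")
    if not 0 <= days_eaten <= days_recorded:
        raise ValueError("days_eaten must be between 0 and days_recorded")
    proportion = days_eaten / days_recorded
    if proportion >= 0.8:
        return "Always"
    if proportion >= 0.6:   # assumed band
        return "Most days"
    if proportion >= 0.4:   # assumed band
        return "Some days"
    if proportion > 0:      # assumed band
        return "Rarely"
    return "Never"


print(frequency_category(4, 5))
```

The resulting label can then be compared with the Time 1 survey response by using the same +/− 1 tolerance applied in the reliability analysis.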

Results

For the validity and reliability study, 87 students were recruited and 65 were screened for eligibility. Sixty-one (93.8%) consented to participate and completed PEA-PODS at Time 1; 58 (95.1%) completed Time 2 and were included in the reliability analysis, and 56 (91.8%) had 3 or more days of food record data and were included in the validity analysis (Figure).

Of 58 participants who completed PEA-PODS at both time points, 20 were in grade 3, 20 were in grade 6, and 18 were in grade 9. Participants were 53% male, 79% white or Caucasian, 2% black or African American, 2% Hispanic or Latino, 7% Asian, and 5% associated with more than 1 race. Participants represented 8 of 24 (33%) state school districts. Students completed the Time 1 and Time 2 surveys in an average of 50.7 (SD = 16.2) and 46.1 (SD = 16.5) minutes, respectively.

PEA

Test-retest reliability

The original survey contained 47 items. After preliminary analysis of the reliability data, 13 items were removed and 6 were added to complete subscales or to balance a diet or PA construct or both (eg, add “I see signs or posters with pictures of healthy food” to balance “I see signs or posters showing ways to be physically active”). Additionally, some items were reworded slightly to match other items within subscales (eg, “I see teachers at my school” changed to “Teachers at my school”). Data were re-analyzed to generate the final results, and 40 items within 4 subscales were included in the final survey: 1) Perceptions of School PA and Nutrition Policies and Practices; 2) Perceptions of Teacher and Classroom Policies and Practices; 3) Perceptions of Cafeteria Policies and Practices; and 4) Perceptions of Recess Policies and Practices. Table 1 includes survey items, responses, item-by-item agreement, and Cronbach α’s and test-retest reliability for the first 2 subscales. Because of the addition of new questions and a skip pattern that reduced the sample size for 2 items, scale reliability could not be calculated for the cafeteria or recess subscales.

Overall, percent agreement was acceptable, good, or excellent for all items (Table 1). Both the School PA and Nutrition Wellness Policies and Practices and the Perception of Teacher and Classroom Wellness Policies and Practices subscales had a high Cronbach α (0.78 and 0.79, respectively) and good test-retest reliability (Pearson r = 0.84, P < 0.001; r = 0.80, P < 0.001, respectively).

PODS

Test-retest reliability

Table 2 describes agreement findings for PODS. At Time 1, most participants (n = 51; 88%) reported usually getting their breakfast from home, compared with 4% (n = 2) from school and 4% (n = 2) from more than 1 location. Usual lunch locations included home only (n = 33; 57%), school only (n = 15; 26%), alternating between home and school (n = 6; 10%), and alternating between home and a restaurant or store (n = 1; 2%). Percent agreements for breakfast and lunch location were good (86% and 81%). At Time 1, 91% (n = 53) of the sample reported eating breakfast all or most days of a usual school week, and 91% reported usually eating lunch all days. For frequency, percent agreement was good (81%) for breakfast and excellent (91%) for lunch.

Across locations, agreement in reporting of consumption of foods within MyPlate categories ranged from 74% (Fruits) to 93% (Beverages) for breakfast, and from 76% (Vegetables) to 95% (Beverages) for lunch. Average overall healthy composite scores at Time 1 were 14.6/32 (SD = 5.3) for breakfast and 18.5/32 (SD = 3.9) for lunch (Table 3). Pearson r for breakfast and lunch composite scores were 0.65 and 0.75, respectively. ICCs were 0.66 and 0.73. Pearson correlations and ICCs were significant (P < 0.01) and acceptable based on previous literature on adolescent diet indices (18,20–22).

Convergent validity

When comparing Time 1 with the aggregated food records, percent agreements were acceptable, good, or excellent for most location and frequency variables (Table 2). For most MyPlate categories, consumption was similar between the Time 1 survey and the food records for both breakfast and lunch, with higher agreement shown for lunch variables (Table 2). Agreement ranged from 68% to 91% for breakfast, and 73% to 88% for lunch.

Average overall healthy composite scores for the aggregated food record were 13.1/32 (SD = 4.9) for breakfast and 17.5/32 (SD = 4.7) for lunch. Pearson correlation coefficients between the Time 1 survey and food records were 0.49 for breakfast (P < 0.01) and 0.34 for lunch (P < 0.05). ICCs were similar. Both correlations were significant (P < 0.05) and acceptable.

Discussion

As school-level LWP implementation continues to be a recommended strategy to prevent childhood obesity, it is critical to develop robust, comprehensive evaluation instruments to assess implementation and impact (2). This includes the perception of LWP implementation by students and other key stakeholders in addition to student impact measures (ie, weight status, physical activity, diet). This study fills critical gaps in available evaluation tools by developing PEA-PODS, a novel, reliable survey assessing students’ perceptions of the school environment, as well as a feasible, reliable, and valid method to assess students’ usual dietary patterns at school.

PEA represents a novel method to assess students’ perceptions of schools’ health-promoting environment. Students are influenced by their peers, teachers, and structural environments of their schools (2,3,11); thus, understanding these perceptions is critical to explain health-promoting behaviors. Further, students are key stakeholders in LWP implementation as they uniquely understand their school’s environment, and sustainability depends on their knowledge, acceptance, and identification of gaps and opportunities (23,24). Qualitative studies have reported on student stakeholders’ perceptions of various aspects of the social and structural “health-promoting” environments of their school (10,11,13,25). PEA, which is easy to administer and reliable, builds on these studies by allowing for assessment on a larger scale. PEA also has broad generalizability, as it inquires about components of the school environment that are tied to federal policy requirements (5), and was developed with input from school wellness researchers nationwide. Use of this tool as part of a comprehensive evaluation can provide support for LWPs and other efforts to promote environmental changes in schools.

PODS has several unique strengths, in addition to demonstrating similar reliability and validity compared with existing dietary questionnaires administered during the school day (14,26,27). First, it uses MyPlate, a recognizable, focused framework, to help students categorize foods. The use of these categories within meal-specific prompts, which have been shown to improve self-report accuracy, demonstrates the potential of PODS to reduce known limitations of self-report dietary assessment tools for youth (14,15). Second, unlike previous tools, PODS can assess both inter- and intra-individual differences in types of food consumed by location of origin (eg, school, home, store on the way to school), which can provide information on healthfulness of foods offered at school compared with those brought from home. Previous studies using observational “lunchbox audits” have shown that school meals are more nutritious than those brought from home (28,29). PODS provides a method to understand this on a broader scale. Additionally, it can provide information about what is consumed within consistent food categories. Together, findings would provide support for school meal policies, increase student and parent buy-in for eating meals provided at school, and align with the USDA’s goal to ensure access to safe and balanced meals for all children by increasing school meals participation (30). PODS was also reliable and valid in detecting dietary patterns, as determined by a simple composite score. Finally, PEA-PODS was validated for a broad age range (grades 3–9) including adolescents, a population that is often excluded from dietary instrument validation studies (14,15).

Limitations

Several limitations should be considered when interpreting study findings. Because we added or reworded 18 items in PEA, further psychometric testing is warranted. PODS, similar to other food frequency questionnaires, is potentially prone to self-report errors; however, the reliability and validity of PODS were acceptable compared with other questionnaires, and both PODS and food records had a low participant burden, which is critical for school-based studies. Although PODS was valid and reliable in detecting dietary patterns using a simple composite score, future studies should consider a more sophisticated system and larger sample to identify dietary patterns (eg, factor analysis). Additionally, as PEA-PODS does not account for the influence of parents or the home environment on student perceptions and diet, it should be administered in conjunction with parent surveys or other methods to understand the home environment.

Findings should also be considered in light of the small, homogeneous sample. As our sample size limited analysis of variables that were not answered by at least 35% of the sample, we were unable to further investigate items within MyPlate categories. Further, we could not compare foods accessed from different locations because of insufficient variability. Finally, although our sample included a broad age range and representation from one-third of state school districts, it lacked racial and ethnic diversity.

Conclusion

This study developed PEA-PODS, 2 distinct yet complementary surveys for examining student perceptions of the school environment and dietary patterns in school, filling a significant gap in the school wellness literature. Each survey may be administered independently. PEA-PODS was found to be reliable, with the dietary component (PODS) also demonstrating validity. Given the focus on LWPs following a recent final rule (6), PEA-PODS is timely and may be used to examine implementation and impact of LWPs in addition to other school health promotion initiatives.

Acknowledgments

Support for this research was provided by the Mid-Atlantic Nutritional Obesity Research Center (NORC) through SEED funding and the Summer Program in Obesity, Diabetes, and Nutrition Research Training (SPORT; National Institutes of Health Grant ID: T35DK095737); the United States Department of Agriculture AFRI Childhood Obesity Grant (ID: 2016-68001-24927); and the National Institute of Diabetes and Digestive and Kidney Diseases (Grant ID: F32DK115146). No copyrighted material or surveys/instruments/tools were used. The authors would like to thank our nationwide panel of school wellness experts for providing their input, as well as the study participants.

Author Information

Corresponding Author: Hannah Lane, PhD, MPH, University of Maryland School of Medicine, Department of Pediatrics, Division of Growth and Nutrition, 737 W Lombard St, Room 169C, Baltimore, MD 21201. Telephone: 410-706-2136. Email: hlane@som.umaryland.edu.

Author Affiliations: 1University of Maryland School of Medicine, Department of Pediatrics, Division of Growth and Nutrition. 2Creighton University School of Medicine. 3Boise State University, Initiative for Healthy Schools, College of Education. 4University of Maryland School of Medicine, Department of Family and Community Medicine, Center for Integrative Medicine.

References

1. Institute of Medicine Committee on Accelerating Progress in Obesity Prevention. Accelerating progress in obesity prevention: solving the weight of the nation. Washington (DC): Institute of Medicine; 2012.

2. Story M, Nanney MS, Schwartz MB. Schools and obesity prevention: creating school environments and policies to promote healthy eating and physical activity. Milbank Q 2009;87(1):71–100.

3. Terry-McElrath YM, O’Malley PM, Delva J, Johnston LD. The school food environment and student body mass index and food consumption: 2004 to 2007 national data. J Adolesc Health 2009;45(3 Suppl):S45–56.

4. Cvjetan B, Utter J, Robinson E, Denny S. The social environment of schools and adolescent nutrition: associations between the school nutrition climate and adolescents’ eating behaviors and body mass index. J Sch Health 2014;84(10):677–82.

5. Healthy, Hunger-Free Kids Act of 2010. Pub Law No 111-296, 124 Stat 3183. https://www.gpo.gov/fdsys/pkg/PLAW-111publ296/pdf/PLAW-111publ296.pdf. Accessed May 2, 2018.

6. Food and Nutrition Service, USDA. Local school wellness policy implementation under the Healthy, Hunger-Free Kids Act of 2010. Final rule. Fed Regist 2016;81(146):50151–70.

7. Hager ER, Rubio DS, Eidel GS, Penniston ES, Lopes M, Saksvig BI, et al. Implementation of local wellness policies in schools: role of school systems, school health councils, and health disparities. J Sch Health 2016;86(10):742–50.

8. McGraw SA, Sellers D, Stone E, Resnicow KA, Kuester S, Fridinger F, et al. Measuring implementation of school programs and policies to promote healthy eating and physical activity among youth. Prev Med 2000;31(2):S86–97.

9. Turner L, Sandoval A, Chaloupka FJ. Bridging the Gap’s Food and Fitness Elementary School Survey: technical report on survey development, sampling, and methodology. Chicago (IL): Bridging the Gap, Health Policy Center, Institute for Health Research and Policy, University of Illinois at Chicago; 2015. http://www.bridgingthegapresearch.org/_asset/34zbxw/BTG_Food_Fitness_ES_survey_methodology_Apr_2015.pdf. Accessed May 4, 2018.

10. Chatterjee A, Daftary G, Campbell M, Gatison L, Day L, Ramsey K, et al. “Can’t we just have some Sazón?” Student, family, and staff perspectives on a new school food program at a Boston high school. J Sch Health 2016;86(4):273–80.

11. Morton KL, Atkin AJ, Corder K, Suhrcke M, van Sluijs EM. The school environment and adolescent physical activity and sedentary behaviour: a mixed-studies systematic review. Obes Rev 2016;17(2):142–58.

12. Wang MT, Holcombe R. Adolescents’ perceptions of school environment, engagement, and academic achievement in middle school. Am Educ Res J 2010;47(3):633–62.

13. Asada Y, Hughes AG, Read M, Schwartz MB, Chriqui JF. High school students’ recommendations to improve school food environments: insights from a critical stakeholder group. J Sch Health 2017;87(11):842–9.

14. Tugault-Lafleur CN, Black JL, Barr SI. A systematic review of methods to assess children’s diets in the school context. Adv Nutr 2017;8(1):63–79.

15. Sharman SJ, Skouteris H, Powell MB, Watson B. Factors related to the accuracy of self-reported dietary intake of children aged 6-12 years elicited with interviews: a systematic review. J Acad Nutr Diet 2016;116(1):76–114.

16. ChooseMyPlate.gov [Internet]. Washington (DC): United States Department of Agriculture. https://www.choosemyplate.gov/. Accessed June 2, 2017.

17. Heise DR. Separating reliability and stability in test-retest correlation. Am Sociol Rev 1969;34(1):93–101.

18. Schröder H, Benitez Arciniega A, Soler C, Covas MI, Baena-Díez JM, Marrugat J; REGICOR investigators; HERMES investigators. Validity of two short screeners for diet quality in time-limited settings. Public Health Nutr 2012;15(4):618–26.

19. US Department of Health and Human Services and US Department of Agriculture. 2015–2020 dietary guidelines for Americans. 8th Ed. http://health.gov/dietaryguidelines/2015/guidelines/. Accessed April 23, 2018.

20. Benítez-Arciniega AA, Mendez MA, Baena-Díez JM, Rovira Martori MA, Soler C, Marrugat J, et al. Concurrent and construct validity of Mediterranean diet scores as assessed by an FFQ. Public Health Nutr 2011;14(11):2015–21.

21. Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med 2016;15(2):155–63.

22. Wong JE, Parnell WR, Howe AS, Black KE, Skidmore PM. Development and validation of a food-based diet quality index for New Zealand adolescents. BMC Public Health 2013;13(1):562.

23. Hoelscher DM, Moag-Stahlberg A, Ellis K, Vandewater EA, Malkani R. Evaluation of a student participatory, low-intensity program to improve school wellness environment and students’ eating and activity behaviors. Int J Behav Nutr Phys Act 2016;13(1):59.

24. Jomaa LH, McDonnell E, Weirich E, Hartman T, Jensen L, Probart C. Student involvement in wellness policies: a study of Pennsylvania local education agencies. J Nutr Educ Behav 2010;42(6):372–9.

25. MacLellan D, Holland A, Taylor J, McKenna M, Hernandez K. Implementing school nutrition policy: student and parent perspectives. Can J Diet Pract Res 2010;71(4):172–7.

26. Penkilo M, George GC, Hoelscher DM. Reproducibility of the School-Based Nutrition Monitoring Questionnaire among fourth-grade students in Texas. J Nutr Educ Behav 2008;40(1):20–7.

27. Paxton A, Baxter SD, Fleming P, Ammerman A. Validation of the school lunch recall questionnaire to capture school lunch intake of third- to fifth-grade students. J Am Diet Assoc 2011;111(3):419–24.

28. Farris AR, Misyak S, Duffey KJ, Davis GC, Hosig K, Atzaba-Poria N, et al. Nutritional comparison of packed and school lunches in pre-kindergarten and kindergarten children following the implementation of the 2012-2013 National School Lunch Program standards. J Nutr Educ Behav 2014;46(6):621–6.

29. Stevens L, Nicholas J, Wood L, Nelson M. School lunches v. packed lunches: a comparison of secondary schools in England following the introduction of compulsory school food standards. Public Health Nutr 2013;16(6):1037–42.

30. United States Department of Agriculture. Strategic Plan 2014–2018: Strategic Goal 4, Ensure that all of America’s children have access to safe, nutritious, and balanced meals. https://www.usda.gov/sites/default/files/documents/usda-strategic-plan-fy-2014-2018.pdf. Accessed November 13, 2017.
