Developing a Web-Based Cost Assessment Tool for Colorectal Cancer Screening Programs

ORIGINAL RESEARCH — Volume 16 — May 2, 2019

This article is part of the CDC Colorectal Cancer Control Program collection.

Sonja Hoover, MPP1; Sujha Subramanian, PhD1; Florence Tangka, PhD, MS2 (View author affiliations)

Suggested citation for this article: Hoover S, Subramanian S, Tangka F. Developing a Web-Based Cost Assessment Tool for Colorectal Cancer Screening Programs. Prev Chronic Dis 2019;16:180336. DOI: http://dx.doi.org/10.5888/pcd16.180336.

PEER REVIEWED

On This Page

Summary

What is already known on this topic?

Centers for Disease Control and Prevention and RTI International designed a web-based cost assessment tool (CAT) to collect cost and resource data. The design of the CAT was based on published methods of collecting cost data for program evaluation.

What is added by this report?

We describe the development of the web-based CAT, evaluate the quality of the data obtained, and discuss lessons learned. We found that grantees were successfully able to collect and report cost data across years by using the web-based CAT.

What are the implications for public health practice?

Data on activity-based expenditures and funding sources, collected using the web-based CAT, are essential in planning for the allocation of limited health care resources.

Abstract

Introduction

We developed a web-based cost assessment tool (CAT) to collect cost data as an improvement from a desktop instrument to perform economic evaluations of the Centers for Disease Control and Prevention’s (CDC’s) Colorectal Cancer Control Program (CRCCP) grantees. We describe the development of the web-based CAT, evaluate the quality of the data obtained, and discuss lessons learned.

Methods

We developed and refined a web-based CAT to collect 5 years (2009–2014) of cost data from 29 CRCCP grantees. We analyzed funding distribution; costs by budget categories; distribution of costs related to screening promotion, screening provision, and overarching activities; and reporting of screenings for grantees that received funding from non-CDC sources compared with those grantees that did not.

Results

CDC provided 85.6% of the resources for the CRCCP, with smaller amounts from in-kind contributions (7.8%), and funding from other sources (6.6%) (eg, state funding). Grantees allocated, on average, 95% of their expenditures to specific program activities and 5% to other activities. Some non-CDC funds were used to provide screening tests to additional people, and these additional screens were captured in the CAT.

Conclusion

A web-based tool can be successfully used to collect cost data on expenditures associated with CRCCP activities. Areas for future refinement include how to collect and allocate dollars from other sources in addition to CDC dollars.

Introduction

Colorectal cancer (CRC) screening can detect early-stage CRC and adenomatous polyps (1,2). However, CRC screening remains low; only 67.3% of adults aged 50 to 75 years in the United States received CRC screening that was consistent with US Preventive Services Task Force recommendations (3). To explore the feasibility of a CRC screening program for the underserved US population, the Centers for Disease Control and Prevention (CDC) established the Colorectal Cancer Screening Demonstration Program (Demo), conducted from 2005 through 2009. In 2009, CDC modified and expanded efforts to promote and provide CRC screening to 29 states and tribal organizations through the Colorectal Cancer Control Program (Program 1), which was conducted from 2009 through 2014) (4).

CDC and RTI International conducted economic evaluations as part of the Demo and Program 1. To help improve cost evaluation of CRC screening, we developed a cost assessment tool (CAT) to collect cost data and to perform economic evaluations. Cost assessments allow program planners and policy makers to determine optimal allocation of limited health care resources, identify the most efficient approach to implementing screening programs, and assess annual budget implications (5). However, cost assessment is a challenge for CRC programs because funds may come from many sources, different tests may be used (eg, colonoscopy vs stool tests), grantees may choose different screening promotion activities, and grantees may have different relationships with state and local public health organizations or with contractors. We focus on the CAT for Program 1 and describe lessons learned from designing and implementing the CAT to improve future data collection efforts.

Methods

The design of the web-based CAT for Program 1 (OMB no. 0920–0745) was based on published methods of collecting cost data for program evaluation (5–14). We collected data from a programmatic perspective on all funding sources of the grantees, including federal, nonfederal (eg, state or national organizations), and in-kind. We then asked grantees to collect cost data on activities relevant to the program in 5 budget categories: labor; contracts, materials, and supplies; screening and diagnostic services for each screening test provided; consultants; and administration. In the Program 1 CAT, all budget categories were allocated to specific program activities. We asked grantees to provide costs on screening promotion and provision activities. We also had a category of overarching activities, which included activities to support both screening promotion and provision activities, such as program management and quality assurance (Appendix).

Data collection procedures

For the Demo, we initially collected all resource use data via a Microsoft Excel-based tool. However, in 2009 we piloted a web-based tool. The web-based tool allowed us to embed data checks within the Program 1 CAT; therefore, fewer mistakes would be made during data entry and the quality of the data received would be improved. Examples of the embedded checks included asking grantees to allocate at least 95% of the total amount of annual funding to specific program activities in their reporting. By using an algorithm indicating that total allocation to spending activities was equal to or greater than 95% of total funding, the grantee could submit the CAT; if total allocation was less than 95%, the grantee needed to revise inputs. Grantees also had to confirm that 100% of staff time spent was allocated to specific activities and that the amount of funding received and the amount of carryover funding from previous years were accurate. If any of these checks failed, the grantee would need to review and revise inputs before submitting. Because of the ease of use of the web-based tool and the success of embedded checks, we implemented the web-based tool for Program 1.

In the Demo version of the CAT, we had an overall “other” category for activities that were not easily placed in existing categories. The activities that were placed in this “other” category were activities where grantees received lump-sum amounts that could not be easily divided among existing activities, or activities that were not included in the existing list. In the Program 1 web-based CAT, we added 2 “other” categories: an “other” category specifically for screening promotion activities, and an “other” category specifically for screening provision activities. In analyzing the second year of Program 1 CAT data, we found commonalities in activities that were included in the “other” categories, leading us to add more activities to the CAT, including patient navigation for both screening promotion and screening provision components and mass media for screening promotion activities.

In addition to collecting Program 1 cost data, we collected data on the number of screenings conducted by the grantee. We asked grantees to report on total number of individuals screened, screening tests performed by test type, follow-up colonoscopies, adenomatous polyps/lesions detected, and cancers detected. We also asked grantees to report total number of people previously diagnosed with CRC who were undergoing follow-up surveillance for recurrence or development of CRC and total number of people enrolled in insurance programs. We used this data to supplement the Colorectal Cancer Clinical Data Elements (CCDEs) that Program 1 grantees provided CDC. While the CCDEs collected data only on screenings funded by CDC, the Program 1 CAT collected the same information for all screenings facilitated by the grantee regardless of the source of funding.

To maintain systematic and standardized data collection, we provided all grantees with a data user’s guide and provided technical assistance via teleconferences and email. We hosted webinars about how to collect and input data using the Program 1 web-based CAT. The information in the Program 1 CAT was collected retrospectively; however, to improve the accuracy of the data, grantees were encouraged to track and log information required prospectively when feasible. Cost data were collected and analyzed on an annual basis for 5 years (2009–2014). In the first year of Program 1, we collected data from 26 grantees; in years 2 through 5, we collected data from 29 grantees annually.

Data quality assessment and analysis

On completion of the annual submission of the Program 1 CAT, we conducted data quality checks. We confirmed that data were entered into each of the broad categories of personnel, contracts, screening provision, and administration/overhead. If no costs were reported in any of these categories, we followed up with grantees to understand why. When funding amounts were reported for screening provision, we verified that screening numbers were also provided in the tool (or to CDC in year 5). Each year, before releasing the Program 1 annual CAT summary data to grantees, CDC also compared the data reported in the CAT with information in the fiscal database on approved, expended, and carryover funds. We generally found high levels of concordance.

To analyze the Program 1 CAT, we calculated staff cost per activity by using salary information and hours spent by staff members on each activity. We also calculated cost of contracts by activity and prepared a summary of cost data for each submission for each grantee’s review and approval. The summary provided grantees with information on the labor and nonlabor cost per activity and costs by budget category and in-kind contributions.

We performed 4 different analyses. First, we examined the distribution of funding from CDC, in-kind, and other sources. This evaluation of funding was essential to identify the extent to which assessments based on CDC funding alone would provide valid results and whether all funding sources needed to be considered. Second, we analyzed the cost by budget categories because certain types of data, such as contracts, tend to be more difficult to allocate to specific activities. Third, we examined the distribution of expenditures for screening promotion activities, screening provision activities, and overarching activities for each year of the program. On the basis of qualitative feedback from grantees, we anticipated that the overarching component would be high in year 1 because a large proportion of resources was initially allocated to planning activities; similarly, screening provision costs would be low because of the contracts that needed to be executed before initiating screenings of eligible individuals. Fourth, we evaluated the percentage of total expenditures that was not allocated to specific activities by year (ie, reported as miscellaneous “other” activity) and summarized the “other” activity category across all program years. As a quality measure, we required that at least 95% of the total funding be allocated to specific budget categories.

We compared the number of people screened based on data from both the Program 1 CAT and from the CCDEs. For grantees reporting other funding sources in addition to CDC dollars and where the other sources were earmarked for screening provision, we anticipated higher reported numbers of people screened. To reduce the reporting burden, in year 5 we did not require grantees to report the number of people screened if they received only CDC funding. We asked only grantees who received funds from non-CDC sources to report screens in the Program 1 CAT and used the screens reported in the CCDEs for grantees who reported only CDC funding (some of these grantees continued to voluntarily report the screens performed in the CAT). Because we could not verify additional funding for all grantees for each year in sufficient detail, we excluded selected grantees from this comparative analysis. We excluded grantees if they had not yet begun screening (generally during year 1) and if they reported extra funding inconsistently across years (eg, if a grantee reported extra funding in years 1, 3, and 4 but not in 2, they were excluded because screens sometimes overlap across years, and we could not ensure accuracy). Overall, we had a total of 45 program years with only CDC funds for screening, and 57 program-years with other funding for screening.

Results

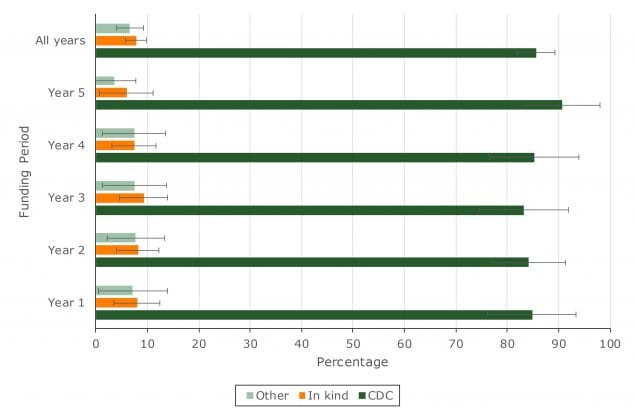

On average, most funding (85.6%) was from CDC (Figure 1). A smaller proportion of grantees indicated that they received in-kind contributions (7.8%) or funding from other sources (6.6%). The total amount from all sources equaled $148,016,341.

Figure 1.

Percentage distribution of funding sources, by year, Colorectal Cancer Control Program, 2009–2014. Error bars indicate confidence intervals. [A tabular version of this figure is also available.]

Percentage distribution of funding sources, by year, Colorectal Cancer Control Program, 2009–2014. Error bars indicate confidence intervals. [A tabular version of this figure is also available.]

In year 1, most costs (43.8%) were for overarching activities, which decreased to 37.2% by year 5 (Table 1). Screening promotion activities costs accounted for one-third of cost in year 1 and decreased to 27.5% by year 5. Screening provision activities comprised the smallest percentage of total costs in year 1 and ranged from 34% to 39% for years 2 to 5. The total amount by year ranged from $22,612,125 in year 1 to $33,037,756 in year 2.

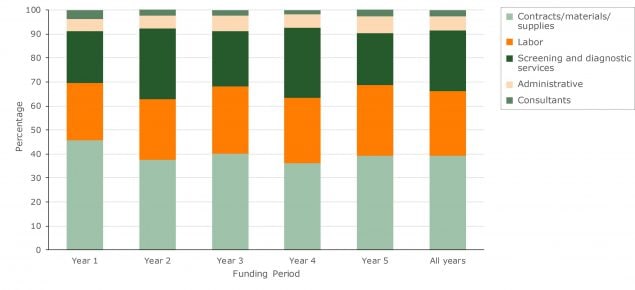

The largest total cost category in all years was contracts, materials, and supplies (Figure 2). This category was intended primarily for nonclinical services and averaged 39.4% across the years. Contracts generally comprised the largest part of these costs, while materials and supplies accounted for a smaller portion. The lowest costs were in the consultants category: grantees reported using less than 4% of their funding for consultants in any year.

Figure 2.

Percentage distribution of total cost by budget category, Colorectal Cancer Control Program, 2009–2014. [A tabular version of this figure is also available.]

Percentage distribution of total cost by budget category, Colorectal Cancer Control Program, 2009–2014. [A tabular version of this figure is also available.]

Overall, grantees were able to allocate more than 95% of their costs to specific program activities. Across years, grantees were unable to allocate approximately 2.4% of costs among promotion activities, 0.8% of costs among provision activities, and 1.8% of costs among other activities (Table 2).

Grantees who received funding from other sources reported higher average screening numbers in the Program 1 CAT compared with the CCDEs (Table 3). Across all years, grantees with additional funding reported an average absolute difference of 976 individuals screened compared with what was reported in the CCDEs. Grantees that did not report additional funding reported similar screening numbers as in the Program 1 CAT and the CCDEs with an average absolute difference across all years of 6.

Discussion

We described how we developed and used a standardized cost data collection instrument to support an economic evaluation of grantees participating in Program 1. We collected data from grantees annually for 5 years. We found that, in addition to CDC, in-kind cost contributions and funding from other sources were important sources of assistance to the programs, although not all grantees indicated that they were recipients of additional contributions. Among those who did, many did not receive or report additional contributions consistently. This may account for the slight variation in distribution of funding sources across years, particularly in the last year of the program. To collect this data more accurately in the future, grantees need to be provided guidance on how to collect this information.

Spending for overarching activities, those that supported both screening promotion and screening provision activities, were a significant portion of grantees’ expenditures, particularly in the early years. This was not surprising because programs were in their start-up phases and had not yet begun in earnest to promote or provide CRC screening. Grantees needed time, for example, to hire their staff and form partnerships to make the programs viable. As the programs were implemented, the proportion of funding used for these overarching activities generally decreased, as expected.

All grantees reported cost data by budget category, and more than half of expenditures was allocated to labor and to screening and diagnostic services. On the basis of previous experience in using a cost tool (to collect resource use data for the National Breast and Cervical Cancer Early Detection Program), labor and clinical service costs are typically captured accurately (15). However, allocating contracts expenditures can be more difficult to accomplish systematically because contract funds are provided to partners who often do not report details on the activities performed to the grantees. Future studies can be designed to collect additional information in a consistent manner from partner organizations to increase the completeness and quality of the activity-based cost assignment.

We found that grantees were able to collect and assign most of their costs to activities conducted during the program years. All grantees were able to allocate 95% or more of their funding to specific activities in the Program 1 CAT. To achieve this detailed reporting, we found numerous processes critical. First, it was important to solicit grantee input in formulating the activity listing in the CAT. Although there was a focus on evidence-based interventions recommended in The Guide to Community Preventive Services by the Community Preventive Services Task Force (such as client reminders and small media), grantees also conducted screening promotion activities that were not evidence-based interventions (eg, patient navigation, professional training) that we ultimately included in the Program 1 CAT (16). Second, grantee input during the design of the web-based CAT was invaluable in terms of creating a user-friendly tool. The web-based CAT had embedded checks to ensure efficiency in collection of high-quality data, which included a 1-step review and finalization process before submission. Third, we had a dedicated staff person provide technical assistance to grantees via telephone or email. We also drafted a detailed user’s guide that contained definitions of activities and step-by-step instructions on how to enter data.

We found it was essential on the Program 1 CAT to solicit from grantees the number of people screened for CRC and the number of screens conducted. Nearly 15% of funding was from sources other than CDC, and much of this funding was allocated to screening provision. Grantees with additional funding sources were able to conduct more screens than grantees who did not report added funding. Had we not asked for this information, we would have substantially underestimated the number of people screened. With the complete screening information available in the CAT, we were able to perform a comprehensive assessment of the cost per screen to inform future program planning (15,17).

We encountered numerous limitations in analyzing cost data collected by grantees. Although we collected 5 years of data from grantees, the ultimate sample size was small for each period (26 grantees in year 1, 29 grantees in years 2–5). We attempted through trainings and the user’s guide to define the program activities for the grantees and designate how to allocate time. Although in most cases we were able to attribute most of the costs to program activities, some inconsistencies are likely in how grantees ultimately defined the activities. We also anticipated recall bias related to time inputs, although we tried to alleviate that through training and by encouraging tracking of data prospectively. Lastly, some grantees were unable to disaggregate contracts and other inputs into activities and could only report them as a lump sum. These were allocated into the “other” categories.

We provide details on the methods used to collect data on activity-based expenditures and funding sources. These data are necessary to plan for optimal use of resources, and the additional details in these data are advantageous compared with previously existing resources such as budget or funding information. Although the focus of the CAT is specifically on cost and resource collection for the Demo and for Program 1, the CAT can be customized. For example, the CAT has been used with success in estimating cost and resource use for both national and international cancer registries and for other cancer screening programs (18–26). On the basis of lessons learned from this 5-year data collection effort, the CAT was redesigned and tailored for each individual grantee for Program 2 (2015–2020), which is the next iteration of the Program.

Acknowledgments

The findings and conclusions in this manuscript are those of the authors and do not necessarily represent the official position of CDC.

Funding support for Dr Subramanian and Ms Hoover was provided by CDC (contract no. 200-2008-27958, task order 01, to RTI International). The provision of data by grantees was supported through funding under a cooperative agreement with CDC. No copyrighted material, surveys, instruments, or tools were used in this manuscript.

Author Information

Corresponding Author: Sonja Hoover, MPP, 307 Waverley Oaks Rd, Suite 101, Waltham, MA 02452. Telephone: 781-434-1722. E-mail: shoover@rti.org.

Author Affiliations: 1RTI International, Waltham, Massachusetts. 2Division of Cancer Prevention and Control, Centers for Disease Control and Prevention, Atlanta, Georgia.

References

- National Cancer Institute. Colorectal cancer prevention — patient version. Overview. Bethesda (MD): National Cancer Institute; 2015. https://www.cancer.gov/types/colorectal. Accessed January 24, 2017.

- Bibbins-Domingo K, Grossman DC, Curry SJ, Davidson KW, Epling JW Jr, García FAR, et al. Screening for colorectal cancer: US Preventive Services Task Force recommendation statement. JAMA 2016;315(23):2564–75. Erratum in: JAMA 2016;316(5):545; JAMA 2017;317(21):2239. CrossRef PubMed

- Centers for Disease Control and Prevention. Behavioral Risk Factor Surveillance System (BRFSS). http://www.cdc.gov/brfss/index.html. Accessed December 18, 2017.

- Centers for Disease Control and Prevention. Colorectal Cancer Control Program (CRCCP): about the program. https://www.cdc.gov/cancer/crccp/about.htm. Accessed October 4, 2018.

- Tangka FK, Subramanian S, Bapat B, Seeff LC, DeGroff A, Gardner J, et al. Cost of starting colorectal cancer screening programs: results from five federally funded demonstration programs. Prev Chronic Dis 2008;5(2):A47. PubMed

- Anderson DW, Bowland BJ, Cartwright WS, Bassin G. Service-level costing of drug abuse treatment. J Subst Abuse Treat 1998;15(3):201–11. CrossRef PubMed

- Drummond M, Schulpher M, Torrance G, O’Brien B, Stoddard GL. Methods for the economic evaluation of health care programmes (Oxford Medical Publications). Ammanford (GB): Oxford University Press; 2005.

- French MT, Dunlap LJ, Zarkin GA, McGeary KA, McLellan AT. A structured instrument for estimating the economic cost of drug abuse treatment. The Drug Abuse Treatment Cost Analysis Program (DATCAP). J Subst Abuse Treat 1997;14(5):445–55. CrossRef PubMed

- Salomé HJ, French MT, Miller M, McLellan AT. Estimating the client costs of addiction treatment: first findings from the client drug abuse treatment cost analysis program (Client DATCAP). Drug Alcohol Depend 2003;71(2):195–206. CrossRef PubMed

- Subramanian S, Tangka FK, Hoover S, Beebe MC, DeGroff A, Royalty J, et al. Costs of planning and implementing the CDC’s Colorectal Cancer Screening Demonstration Program. Cancer 2013;119(Suppl 15):2855–62. CrossRef PubMed

- Subramanian S, Tangka FK, Hoover S, Degroff A, Royalty J, Seeff LC. Clinical and programmatic costs of implementing colorectal cancer screening: evaluation of five programs. Eval Program Plann 2011;34(2):147–53. CrossRef PubMed

- Subramanian S, Tangka FKL, Hoover S, Royalty J, DeGroff A, Joseph D. Costs of colorectal cancer screening provision in CDC’s Colorectal Cancer Control Program: comparisons of colonoscopy and FOBT/FIT based screening. Eval Program Plann 2017;62:73–80. CrossRef PubMed

- Tangka FK, Subramanian S, Beebe MC, Hoover S, Royalty J, Seeff LC. Clinical costs of colorectal cancer screening in 5 federally funded demonstration programs. Cancer 2013;119(Suppl 15):2863–9. CrossRef PubMed

- Tangka FKL, Subramanian S, Hoover S, Royalty J, Joseph K, DeGroff A, et al. Costs of promoting cancer screening: evidence from CDC’s Colorectal Cancer Control Program (CRCCP). Eval Program Plann 2017;62:67–72. CrossRef PubMed

- Subramanian S, Ekwueme DU, Gardner JG, Trogdon J. Developing and testing a cost-assessment tool for cancer screening programs. Am J Prev Med 2009;37(3):242–7. CrossRef PubMed

- The Guide to Community Preventive Services. Cancer screening: multicomponent interventions — colorectal cancer. Atlanta (GA): The Guide to Community Preventive Services; 2016. https://www.thecommunityguide.org/findings/cancer-screening-multicomponent-interventions-colorectal-cancer. Accessed June 6, 2016.

- Hoover S, Subramanian S, Tangka F, Cole-Beebe M, Joseph D, DeGroff A. Resource requirements for colonoscopy versus fecal-based screening: findings from 5 years of the Colorectal Cancer Control Program. Prev Chronic Dis 2019;16:180338. CrossRef

- Subramanian S, Tangka F, Edwards P, Hoover S, Cole-Beebe M. Developing and testing a cost data collection instrument for noncommunicable disease registry planning. Cancer Epidemiol 2016;45(Suppl 1):S4–12. CrossRef PubMed

- de Vries E, Pardo C, Arias N, Bravo LE, Navarro E, Uribe C, et al. Estimating the cost of operating cancer registries: experience in Colombia. Cancer Epidemiol 2016;45(Suppl 1):S13–9. CrossRef PubMed

- Wabinga H, Subramanian S, Nambooze S, Amulen PM, Edwards P, Joseph R, et al. Uganda experience — using cost assessment of an established registry to project resources required to expand cancer registration. Cancer Epidemiol 2016;45(Suppl 1):S30–6. CrossRef PubMed

- Martelly TN, Rose AM, Subramanian S, Edwards P, Tangka FK, Saraiya M. Economic assessment of integrated cancer and cardiovascular registries: the Barbados experience. Cancer Epidemiol 2016;45(Suppl 1):S37–42. CrossRef PubMed

- Tangka FK, Subramanian S, Edwards P, Cole-Beebe M, Parkin DM, Bray F, et al. Resource requirements for cancer registration in areas with limited resources: analysis of cost data from four low- and middle-income countries. Cancer Epidemiol 2016;45(Suppl 1):S50–8. CrossRef PubMed

- Tangka FK, Subramanian S, Beebe MC, Weir HK, Trebino D, Babcock F, et al. Cost of operating central cancer registries and factors that affect cost: findings from an economic evaluation of Centers for Disease Control and Prevention National Program of Cancer Registries. J Public Health Manag Pract 2016;22(5):452–60. CrossRef PubMed

- Subramanian S, Tangka FK, Ekwueme DU, Trogdon J, Crouse W, Royalty J. Explaining variation across grantees in breast and cervical cancer screening proportions in the NBCCEDP. Cancer Causes Control 2015;26(5):689–95. Erratum in: Cancer Causes Control 2015;26(5):697. CrossRef PubMed

- Subramanian S, Ekwueme DU, Gardner JG, Bapat B, Kramer C. Identifying and controlling for program-level differences in comparative cost analysis: lessons from the economic evaluation of the National Breast and Cervical Cancer Early Detection Program. Eval Program Plann 2008;31(2):136–44. CrossRef PubMed

- Ekwueme DU, Gardner JG, Subramanian S, Tangka FK, Bapat B, Richardson LC. Cost analysis of the National Breast and Cervical Cancer Early Detection Program: selected states, 2003 to 2004. Cancer 2008;112(3):626–35. CrossRef PubMed

.png)

No hay comentarios:

Publicar un comentario