Linking Data From Health Surveys and Electronic Health Records: A Demonstration Project in Two Chicago Health Center Clinics

ORIGINAL RESEARCH — Volume 15 — January 18, 2018

Fikirte Wagaw, MPH¹; Catherine A. Okoro, PhD²; Sunkyung Kim, PhD³; Jessica Park, MPH¹; Fred Rachman, MD¹

Suggested citation for this article: Wagaw F, Okoro CA, Kim S, Park J, Rachman F. Linking Data From Health Surveys and Electronic Health Records: A Demonstration Project in Two Chicago Health Center Clinics. Prev Chronic Dis 2018;15:170085. DOI: http://dx.doi.org/10.5888/pcd15.170085.

PEER REVIEWED

Abstract

Introduction

Monitoring and understanding population health requires conducting health-related surveys and surveillance. The objective of our study was to assess whether data from self-administered surveys could be collected electronically from patients in urban, primary-care, safety-net clinics and subsequently linked and compared with the same patients’ electronic health records (EHRs).

Methods

Data from self-administered surveys were collected electronically from a convenience sample of 527 patients at 2 Chicago health center clinics from September through November 2014. Survey data from patients who consented to linkage were linked to their EHRs.

Results

A total of 251 (47.6%) patients who completed the survey consented to having their responses linked to their EHRs. Consenting participants were older, more likely to report fair or poor health, and took longer to complete the survey than those who did not consent. For 8 of 18 categorical variables, overall percentage agreement between survey data and EHR data exceeded 80% (sex, race/ethnicity, pneumococcal vaccination, self-reported body mass index [BMI], diabetes, high blood pressure, medication for high blood pressure, and hyperlipidemia); of these, the level of agreement was good or excellent (κ ≥0.64) except for pneumococcal vaccination (κ = 0.40) and hyperlipidemia (κ = 0.47). Of 7 continuous variables, agreement was substantial for age and weight (concordance coefficients ≥0.95); all other continuous variables had poor agreement, although agreement between calculated survey BMI and EHR BMI (concordance coefficient = 0.88) approached the moderate level.

Conclusions

Self-administered, web-based surveys can be completed in urban, primary-care, safety-net clinics and linked to EHRs. Linking survey and EHR data can enhance public health surveillance by validating self-reported data, filling gaps in patient data, and extending sample sizes obtained through current methods. This approach will require promoting and sustaining patient involvement.

Introduction

Monitoring and understanding population health requires conducting health-related surveys and surveillance. The Behavioral Risk Factor Surveillance System (BRFSS), for example, is a state-based system of telephone surveys that collect data on health-risk behaviors, chronic conditions, use of preventive services, and health-related quality of life (HRQoL) of adults (1). BRFSS can be modified to assess emerging and urgent health issues and provides data on measures typically unrecorded in the clinical setting (eg, exercise, HRQoL, health attitudes, awareness, health knowledge) (2,3). Searching for new data sources is important, however, because population-based surveys can be costly and time-consuming and may produce biased results that are hard to generalize (1,4–12).

Expanded use of electronic health records (EHRs) — complete with appropriate protection of patient confidentiality — can help improve the design and delivery of public health interventions and clinical care; data in EHRs can be used to help find new causes of infectious disease and to address outbreaks by triggering public health alerts, providing recommendations to clinicians, and enhancing communications between public health practitioners and clinical organizations (11–13). Additionally, EHRs can help identify patients needing medical care, disease management, preventive health services, and behavioral counseling (2,3,14–17). EHRs can also help control rising health care costs by eliminating unnecessary tests, procedures, and prescriptions (17).

EHRs may help improve patient care and population health when linked to survey data and other information about health-related behavior, HRQoL, and details about working and living conditions (2,3,18). For people managing a chronic illness, for example, the EHR can validate responses, because survey answers can be linked to recorded clinical events. Likewise, behaviors (eg, exercise) recorded in a recent survey could trigger alerts and recommendations back through the EHR. Inclusion of patient-reported measures in EHRs can enhance patient-centered care, patient health, and capacity to conduct population-based research (2,3).

The objective of this study was to explore the feasibility of electronically collecting self-administered patient survey data in urban, primary-care, safety-net clinics and subsequently linking and comparing those data with patients’ EHR data.

Methods

Alliance of Chicago Community Health Services (Alliance; http://alliancechicago.org/) is a federal Health Center Controlled Network. Alliance approached 4 of its network health centers about participating in the project, selecting them for their large patient volume, diverse geographic locations, distinct and diverse patient populations, and history of participation in new initiatives. Although 3 health centers approved the project, only 1 was able to participate within the project’s timeframe. We implemented our study in 2 of that health center’s clinics, and the study was approved by the health center’s research review committee.

We recruited clinic patients aged 18 years or older by using fliers and announcements in waiting areas and during check-in. Survey administrators used standardized scripts to summarize the survey’s goals for interested patients. Participants reviewed an electronic consent form and received a hard copy of the form; they provided separate informed consent for survey participation and for subsequent survey–EHR linkage. Each survey participant received a modest incentive, regardless of consent to EHR linkage. Survey administrators were available to assist patients throughout data collection.

From September 2014 through November 2014, a convenience sample of 527 patients completed the self-administered, web-based survey on various brands of electronic tablets, desktop computers, and cellular phones. Tablet data plans were purchased to minimize impact on health center resources and to minimize data connectivity issues.

Questions from the Illinois BRFSS (http://app.idph.state.il.us/brfss/) were used to collect information on patients’ sociodemographic characteristics, health behaviors, chronic conditions, receipt of preventive care services, and medical care. Questions related to chronic conditions were selected on the basis of their ability to be matched to data available in the EHR. Questions on medication use, laboratory findings, and blood pressure readings helped us compare data on self-reported chronic conditions with EHR content. The number of questions each participant was asked depended on sex (eg, sex-specific preventive care services), age (eg, age-specific cancer screenings), and branching based on earlier survey responses. The survey took an average of 20 to 30 minutes to complete and was hosted on the Survey Analytics Online Survey Platform (Survey Analytics LLC).

Of 527 survey participants, 47.6% (n = 251) consented to have their survey responses linked to their EHR; 99% (n = 248) of these consenting patients had an EHR. After survey data collection and EHR extraction were completed, 2 de-identified analytic data sets were created: 1) a set that contained only the survey data of patients who did not consent to EHR linkage and 2) a set that contained the survey and EHR data of patients who consented to EHR linkage.
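The linkage step can be sketched as follows. This is a minimal Python illustration, not the project’s actual pipeline: the `SurveyRecord` type, the `patient_id` linkage key, and the `ehr_by_id` lookup are hypothetical names, and the sketch assumes the EHR extract passed in already excludes direct identifiers. Because the text does not say how the 3 consenting patients without an EHR were handled, the sketch simply counts them separately. The last lines check the consent and match percentages reported above.

```python
from dataclasses import dataclass, field


@dataclass
class SurveyRecord:
    patient_id: str          # hypothetical linkage key; never copied into outputs
    consent_linkage: bool    # separate consent for survey-EHR linkage
    answers: dict = field(default_factory=dict)  # survey responses by question


def build_analytic_sets(surveys, ehr_by_id):
    """Split survey records into 2 de-identified analytic data sets.

    Returns (survey_only, linked, n_no_ehr):
      survey_only: responses of patients who did not consent to linkage
      linked: survey + EHR data of consenting patients with an EHR
      n_no_ehr: consenting patients for whom no EHR was found
    Assumes ehr_by_id values already exclude direct identifiers.
    """
    survey_only, linked, n_no_ehr = [], [], 0
    for rec in surveys:
        if not rec.consent_linkage:
            survey_only.append(dict(rec.answers))
            continue
        ehr = ehr_by_id.get(rec.patient_id)
        if ehr is None:
            n_no_ehr += 1
            continue
        # patient_id is used only for the lookup and is not stored.
        linked.append({"survey": dict(rec.answers), "ehr": dict(ehr)})
    return survey_only, linked, n_no_ehr


def pct(part, whole):
    """Percentage rounded to 1 decimal place."""
    return round(100 * part / whole, 1)


print(pct(251, 527))  # 47.6
print(pct(248, 251))  # 98.8 (reported as 99%)
```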

When possible, differences in how categorical variables were constructed in the survey and in the EHR were resolved by collapsing the original categories into a common metric. Except for blood pressure, continuous variables were constructed similarly in the survey instrument and the EHR. Patients reporting that a health care professional had told them they had high blood pressure (HBP) were asked to enter their systolic and diastolic blood pressure. For the EHR abstraction, the last 3 systolic and diastolic blood pressure readings were extracted, and the mean systolic and diastolic pressures were calculated. Self-reported weight and height were assessed with 2 survey questions: “About how much do you weigh without shoes?” and “About how tall are you without shoes?” Patients were classified as underweight (body mass index [BMI, kg/m²] <18.5), normal weight (BMI 18.5–<25), overweight (BMI 25–<30), or obese (BMI ≥30). Self-classified BMI was assessed with the survey question, “Would you classify your weight as low (underweight), normal weight, overweight, or obese?”
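As a hedged sketch of the variable construction described above, the Python below computes BMI from self-reported weight and height, applies the stated cut points, and averages the 3 most recent EHR blood pressure readings. The text does not state the units recorded for the survey answers, so pounds and inches are an assumption here; averaging whatever readings exist when a patient has fewer than 3 is also an assumption, not something the text specifies. Function names are illustrative.

```python
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound
IN_TO_M = 0.0254       # exact definition of the inch


def bmi_from_imperial(weight_lb: float, height_in: float) -> float:
    """BMI in kg/m² from weight in pounds and height in inches (assumed units)."""
    kg = weight_lb * LB_TO_KG
    m = height_in * IN_TO_M
    return kg / (m * m)


def bmi_category(bmi: float) -> str:
    """Cut points as described in the text: <18.5, 18.5-<25, 25-<30, >=30."""
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal weight"
    if bmi < 30:
        return "overweight"
    return "obese"


def mean_of_last_three(readings):
    """Mean systolic and diastolic pressure over the 3 most recent readings.

    readings: iterable of (date, systolic, diastolic) tuples whose dates sort
    chronologically (ISO 8601 strings or datetime.date objects both work).
    Fewer than 3 readings are averaged as-is (an assumption).
    """
    latest = sorted(readings, key=lambda r: r[0])[-3:]
    if not latest:
        raise ValueError("no blood pressure readings")
    n = len(latest)
    return (sum(r[1] for r in latest) / n, sum(r[2] for r in latest) / n)


bmi = bmi_from_imperial(150, 70)
print(round(bmi, 1), bmi_category(bmi))  # 21.5 normal weight
```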

We calculated the distribution of the study population by survey duration, sociodemographic characteristics, and self-rated health status, overall and by consent to EHR linkage. For categorical variables, we used the χ² test to assess significant differences between those who agreed to survey–EHR linkage and those who did not. For continuous variables, we used the t test to assess significant mean differences between the 2 patient groups. To assess concordance between survey data and EHR data, we examined the 248 patients who consented to EHR linkage and for whom an EHR was found. For categorical variables, we applied Cohen’s κ coefficient (19) with 4 predefined agreement levels: excellent (κ ≥0.9), good (κ ≥0.6 to <0.9), fair (κ ≥0.3 to <0.6), and poor (κ <0.3). Because we observed some cases that may reflect the κ paradox, in which high agreement coexists with a low κ (20), we also calculated overall percentage agreement (100 × number of concordant pairs / total sample size). For continuous variables, we applied Lin’s concordance correlation coefficient (ρc) (21,22) with 4 predefined agreement levels: almost perfect (ρc >0.99), substantial (ρc ≥0.95 to ≤0.99), moderate (ρc ≥0.90 to <0.95), and poor (ρc <0.90) (23). For all analyses, P < .05 was considered significant, and data were analyzed in SAS version 9.3 (SAS Institute, Inc).
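The agreement statistics named above can be written out directly. The Python below is an illustrative sketch, not the SAS code used in the study: it computes overall percentage agreement, Cohen’s κ for paired categorical responses, and Lin’s ρc for paired continuous values (using moments with divisor n, one common convention), and maps each coefficient onto the predefined agreement levels. Function names are hypothetical, and confidence intervals are omitted.

```python
from collections import Counter


def _check_pairs(a, b):
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("need two non-empty sequences of equal length")


def percent_agreement(a, b):
    """100 x number of concordant pairs / total number of pairs."""
    _check_pairs(a, b)
    return 100 * sum(x == y for x, y in zip(a, b)) / len(a)


def cohen_kappa(a, b):
    """Cohen's kappa for paired categorical values (eg, survey vs EHR)."""
    _check_pairs(a, b)
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    ca, cb = Counter(a), Counter(b)
    # Agreement expected by chance from each source's marginal distribution
    p_e = sum(ca[k] * cb[k] for k in ca.keys() | cb.keys()) / (n * n)
    if p_e == 1:
        raise ValueError("kappa undefined: both sources use one category")
    return (p_o - p_e) / (1 - p_e)


def lins_ccc(x, y):
    """Lin's concordance correlation coefficient (moments with divisor n)."""
    _check_pairs(x, y)
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((v - mx) ** 2 for v in x) / n
    syy = sum((v - my) ** 2 for v in y) / n
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y)) / n
    denom = sxx + syy + (mx - my) ** 2
    if denom == 0:
        raise ValueError("CCC undefined: both series are the same constant")
    return 2 * sxy / denom


def kappa_level(k):
    if k >= 0.9:
        return "excellent"
    if k >= 0.6:
        return "good"
    if k >= 0.3:
        return "fair"
    return "poor"


def ccc_level(rc):
    if rc > 0.99:
        return "almost perfect"
    if rc >= 0.95:
        return "substantial"
    if rc >= 0.90:
        return "moderate"
    return "poor"


print(round(lins_ccc([1, 2, 3], [2, 3, 4]), 3))  # 0.571
```

In the example, the 2 series are perfectly correlated yet ρc is only about 0.571, because ρc, unlike Pearson’s r, also penalizes a systematic shift between the 2 sources.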

Results

Participant ages ranged from 18 to 87 years (mean, 43.4 y; standard deviation [SD], 14.7 y) (Table 1). Most participants were non-Hispanic black (90.4%), female (70.4%), and never married or members of an unmarried couple (61.2%); nearly all spoke English as their primary language (96.3%), and most had Medicaid or Medicare as their primary health insurance coverage (69.1%). More than 70% reported their health status as excellent, very good, or good, and 62.7% reported no disability. Most had annual household incomes less than $20,000, rented their primary residence, and had no children in the household.

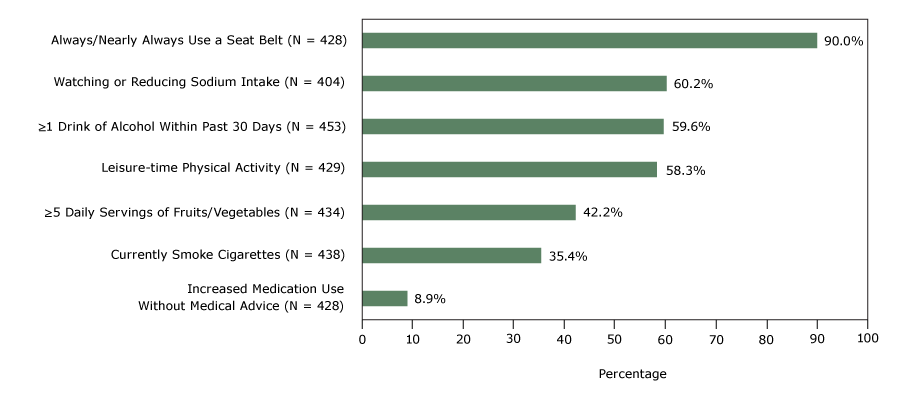

Seven health behaviors of the convenience sample were distributed as follows: always or nearly always wearing a seat belt (90.0%), watching or reducing sodium intake (60.2%), consuming one or more drinks of alcohol in the past 30 days (59.6%), engaging in leisure-time physical activity (58.3%), consuming 5 or more servings daily of fruits and vegetables (42.2%), currently smoking cigarettes (35.4%), and increasing medication use in the past 30 days without the advice of a health care professional (8.9%) (Figure).

Figure.

Self-reported health behaviors of a convenience sample, study linking self-reported survey data with electronic health record data, 2 Chicago health clinics, 2014.

Compared with patients who did not agree to having their survey results linked to their EHRs, those who agreed were older (mean 45.4 y vs 41.5 y, P = .003), more likely to report fair or poor health (32% vs 24%, P = .03), and took longer to complete the electronic survey (27.8 minutes vs 21.3 minutes, P < .01) (Table 1).

Of the 18 categorical variables we examined, overall agreement between the survey and EHR data exceeded 80% for 8 variables (sex, race/ethnicity, pneumococcal vaccination, self-reported BMI, diabetes, HBP, medication for HBP, hyperlipidemia); of these, the level of agreement was good or excellent (κ ≥0.64) except for pneumococcal vaccination (κ = 0.40) and hyperlipidemia (κ = 0.47) (Table 2). Race/ethnicity and diabetes had percentage agreement above 91% but lower κ values of 0.65 (95% confidence interval [CI], 0.45–0.85) and 0.76 (95% CI, 0.66–0.87), respectively. Self-classified BMI showed the lowest level of concordance (overall agreement = 19.5%, κ = 0.16).
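The pattern for race/ethnicity and diabetes (percentage agreement above 91% with a more modest κ) is the κ paradox noted in the Methods. The 2 × 2 tables below are hypothetical, invented for illustration rather than taken from the study: both have 248 pairs and the same 20 discordant pairs, and therefore the same percentage agreement, but the condition is common in one table and rare in the other. Because chance agreement is much higher when nearly everyone falls in one category, κ drops from roughly 0.84 to roughly 0.40.

```python
def kappa_from_2x2(both_yes, survey_only_yes, ehr_only_yes, both_no):
    """Return (percent agreement, Cohen's kappa) from a 2x2 survey-by-EHR table."""
    n = both_yes + survey_only_yes + ehr_only_yes + both_no
    p_o = (both_yes + both_no) / n            # observed agreement
    survey_yes = (both_yes + survey_only_yes) / n
    ehr_yes = (both_yes + ehr_only_yes) / n
    # Chance agreement: both say yes, or both say no, independently
    p_e = survey_yes * ehr_yes + (1 - survey_yes) * (1 - ehr_yes)
    return 100 * p_o, (p_o - p_e) / (1 - p_e)


# Hypothetical tables: 248 pairs each, 20 discordant pairs each
balanced = kappa_from_2x2(110, 10, 10, 118)  # condition common
skewed = kappa_from_2x2(8, 10, 10, 220)      # condition rare
for name, (agree, kappa) in [("balanced", balanced), ("skewed", skewed)]:
    print(f"{name}: agreement {agree:.1f}%, kappa {kappa:.2f}")
```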

Of the 7 continuous variables we examined, agreement between the survey and EHR data was substantial for age (ρc = 0.95; 95% CI, 0.94–0.96) and weight (ρc = 0.98; 95% CI, 0.97–0.98) (Table 3). All other continuous variables had poor agreement (ρc <0.90), although agreement for BMI (ρc = 0.88; 95% CI, 0.84–0.91) approached the moderate level; diastolic blood pressure among patients who reported hypertension had the lowest agreement (ρc = 0.28; 95% CI, 0.13–0.41).

Discussion

This study compared results from a self-administered, web-based survey with de-identified patient data from EHRs in an urban primary-care setting. We found a satisfactory degree of concordance between survey data and EHR data for nonmodifiable demographic characteristics and for some health-related measures: diabetes, HBP, HBP medication, weight, and calculated categorical and continuous BMI. We found lower levels of concordance for modifiable sociodemographic characteristics, pneumococcal vaccination, hyperlipidemia, self-classified BMI, hemoglobin A1c among patients reporting diabetes, and blood pressure (especially diastolic pressure) among patients reporting hypertension. Except for tobacco use screening, EHR data corresponding to the self-reported health-risk behaviors were unavailable for comparison.

Fewer than half of the surveyed patients consented to EHR linkage, and those who consented differed significantly from those who did not. Similar to other researchers’ findings (24), those consenting were older and more likely to report fair or poor health. Unlike other research findings (24), however, we did not find significant differences by sex, employment status, or type of health insurance coverage. Further investigation into factors that may increase consent or enhance patient engagement could aid project sustainability and improve representativeness of the patient population.

Generally, our concordance findings were consistent with those of studies that used similar methods (7,25,26). Our level of agreement for diabetes and BMI was similar to that of previous research assessing data quality between ambulatory medical record data and patient survey data, but we found a higher level of concordance for HBP, HBP medication, and hyperlipidemia and a lower level of concordance for lipid-lowering medication (26). Additionally, we found substantial agreement for weight but poor agreement for height. We also found good overall agreement for BMI categories based on self-reported height and weight (86%) but poor overall agreement for self-classified BMI (20%). Studies show that people generally overestimate their height and underestimate their weight and BMI (6). This reporting bias varies, however, by the demographic characteristics of the study population (eg, sex, race, age); for example, men are more likely than women to exaggerate their height. Our convenience sample was predominantly female, non-Hispanic black, and aged 45 to 64 years. Differences between self-reported and direct measures may also reflect the population’s sociocultural perceptions of body weight and may be biased by social desirability (6). Our results demonstrate the need for direct measures to validate self-reported data, because patients were more likely to place themselves in a lower BMI category than their calculated BMI indicated. Our results may also reflect patients’ lack of awareness of their BMI, which may place them at greater risk of poor health outcomes. Further research is needed to fully understand and address this finding (eg, through improved patient–provider communication or obesity screening and intervention). Because our results are not generalizable to the health center’s patient population or to other patient populations (owing to the convenience sample and apparent selection bias), they should be interpreted with care.

Survey and EHR data may have poor concordance for many reasons, and discordance may show where each data source can help improve the accuracy and completeness of patient and population data. When survey and EHR clinical measures are not concordant, EHR data tend to be more accurate than survey data because self-report is subject to various biases (5–7). For example, correctly remembering the date of one’s last tetanus shot or one’s last hemoglobin A1c test result is difficult. For modifiable sociodemographic characteristics, however, self-reported data are likely to be more accurate than EHR data, because busy health centers have few resources or incentives to update nonclinical data elements. Institutional incentives may also contribute to poor concordance; for example, a sliding fee scale could encourage under-reporting of income or private health care coverage, and higher health insurance premiums for consumers who smoke could encourage under-reporting of smoking (27).

Our study has several limitations. First, we used a convenience sample of patients from 2 Chicago health center clinics. This selection bias limits our ability to generalize to the health center’s patient population across all its clinic sites or to other patient populations in the area; the sample was predominantly female, non-Hispanic black, unmarried, and low income, most patients had public insurance coverage, and patients were more likely to have a cellular telephone or an email address than a landline telephone. As a result, public health surveillance may need multiple data collection modes and data sources to effectively reach population subgroups and ensure that the data represent them. Moreover, public health professionals and policy makers must be aware of subpopulations that are unconnected to the health care system and whose members have limited health records or none at all (4). Second, fewer than 50% of the patients surveyed consented to EHR linkage. Further analysis of the factors associated with consent, particularly those amenable to modification, is needed to access the wealth of data available in EHRs. Third, some variables in the linked data had low prevalence, which prevented further assessment of agreement. Fourth, some variables had high percentage agreement but low κ scores, suggesting that much of the observed agreement may have been due to chance alone. Finally, neither data source can be considered a gold standard for all items measured. For example, survey data have inherent biases, and EHR data and the data extraction process may have complexities that are not fully known or accounted for. Nevertheless, these limitations may diminish over time with meaningful use of EHRs, advances in health information technologies, an emphasis on quality and patient-centered care, and new methods that integrate lifestyle measures into prescribed health care (eg, prescribed physical activity) (2–4,28).

These limitations notwithstanding, a symbiotic relationship exists between survey data and clinical data. Self-reported data are needed to augment clinical data for medical services (eg, immunizations, screenings, behavioral counseling), imaging and other diagnostics, and medications obtained outside the patient’s health center (2,7). Self-reported measures, although subject to biases, are vital to providing a complete picture of patient health, because many health-related measures may not be in the EHR (eg, behaviors, HRQoL, health attitudes, awareness, knowledge) or may not be up to date (eg, modifiable sociodemographic characteristics) (2,17,29,30). At the same time, EHRs can be used to validate self-reported clinical measures and to develop correction factors that can be applied to self-reported data when physical measurement is costly or not possible (6). Together, the 2 data sources have the potential to improve disease management, reduce costs, and enhance two-way data exchange between public health and clinical organizations.
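One simple form of correction factor is a linear calibration equation fitted on linked records: the EHR measurement is regressed on the self-reported value, and the fitted equation is then applied to self-reports from people who have no measurement. The text does not specify a method, so the Python below is only a hypothetical ordinary least-squares sketch, and the weight pairs in it are invented for illustration.

```python
def fit_linear_correction(self_reported, measured):
    """Ordinary least-squares fit of measured = intercept + slope * self_reported."""
    if len(self_reported) != len(measured) or len(self_reported) < 2:
        raise ValueError("need at least 2 paired observations")
    n = len(self_reported)
    mx = sum(self_reported) / n
    my = sum(measured) / n
    sxx = sum((x - mx) ** 2 for x in self_reported)
    if sxx == 0:
        raise ValueError("self-reported values have no variance")
    sxy = sum((x - mx) * (y - my) for x, y in zip(self_reported, measured))
    slope = sxy / sxx
    return my - slope * mx, slope


def apply_correction(value, intercept, slope):
    """Predict the measured value from a new self-reported value."""
    return intercept + slope * value


# Hypothetical linked pairs: self-reported vs EHR-measured weight (lb)
reported = [140, 155, 170, 185, 200]
measured = [144, 160, 176, 192, 207]
b0, b1 = fit_linear_correction(reported, measured)
print(round(apply_correction(160, b0, b1), 1))
```

Because, as noted above, reporting bias varies by demographic characteristics such as sex, race, and age, an equation like this may need to be fitted separately within such subgroups.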

As health systems and their information technologies continue to evolve, researchers should continue the search for high-quality patient health data. Doing so can help health practitioners, public health professionals, and policy makers successfully evaluate and reduce existing health disparities. Furthermore, public health policy and practice can be guided by data science methods (including predictive analytics) by using combined data sources. Population-based surveys, EHRs, and other data sources all have a role in providing a more complete picture of the health of all Americans, while improving their health and access to care. To this end, this project demonstrated the feasibility of computer-assisted collection of consumer survey data and matching it to EHR data. This approach can enhance health information from unique, often underrepresented populations with health disparities, increase efficiency and breadth of surveillance activities, and improve validity of objective measures. More research is needed to promote and sustain patient involvement in their health and health records, which is vital to the success and sustainability of this approach.

Acknowledgments

F.W. supervised the study. C.A.O. provided technical guidance, contributed to data analysis, and drafted and revised the paper. S.K. provided statistical guidance and contributed to data analysis. J.P. assisted with the study. F.R. conceived the study. All authors contributed to interpreting results and revising the manuscript. The authors thank Bruce Steiner, MS, Behavioral Risk Factor Surveillance System Coordinator, Illinois Department of Public Health (IDPH), for his suggestions; George Khalil, DrPH, for programming the web-based survey instrument; and David Flegel, MS, technical writer, for his services. The authors also thank Mrs. Berneice Mills-Thomas and the staff at Near North Health Services Corporation for supporting the implementation of this project. We are also grateful for the work of the survey team led by Diana Beasley, which included Elizabeth Adetoro, Anusha Balaji, Audrey Patterson, Jessica Park, Matthew Sakumoto, and Mary Kay Shaw. Funding for this project was received from IDPH (contract no. 52400015C). The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

Author Information

Corresponding Author: Fikirte Wagaw, MPH, Chicago Department of Public Health, 333 S. State St, 2nd Floor, Chicago, IL 60604. Telephone: 312-747-9891. Email: fikirte.wagaw@cityofchicago.org.

Author Affiliations: ¹Alliance of Chicago Community Health Services, Chicago, Illinois. ²Division of Population Health, Centers for Disease Control and Prevention, Atlanta, Georgia. ³Northrop Grumman, Atlanta, Georgia.

References

- Pierannunzi C, Hu SS, Balluz L. A systematic review of publications assessing reliability and validity of the Behavioral Risk Factor Surveillance System (BRFSS), 2004–2011. BMC Med Res Methodol 2013;13(1):49.

- Glasgow RE, Kaplan RM, Ockene JK, Fisher EB, Emmons KM. Patient-reported measures of psychosocial issues and health behavior should be added to electronic health records. Health Aff (Millwood) 2012;31(3):497–504.

- Krist AH, Phillips SM, Sabo RT, Balasubramanian BA, Heurtin-Roberts S, Ory MG, et al; MOHR Study Group. Adoption, reach, implementation, and maintenance of a behavioral and mental health assessment in primary care. Ann Fam Med 2014;12(6):525–33.

- Crilly JF, Keefe RH, Volpe F. Use of electronic technologies to promote community and personal health for individuals unconnected to health care systems. Am J Public Health 2011;101(7):1163–7.

- Cronin KA, Miglioretti DL, Krapcho M, Yu B, Geller BM, Carney PA, et al. Bias associated with self-report of prior screening mammography. Cancer Epidemiol Biomarkers Prev 2009;18(6):1699–705.

- Connor Gorber S, Tremblay M, Moher D, Gorber B. A comparison of direct vs. self-report measures for assessing height, weight and body mass index: a systematic review. Obes Rev 2007;8(4):307–26.

- Rolnick SJ, Parker ED, Nordin JD, Hedblom BD, Wei F, Kerby T, et al. Self-report compared to electronic medical record across eight adult vaccines: do results vary by demographic factors? Vaccine 2013;31(37):3928–35.

- Khoury MJ, Lam TK, Ioannidis JP, Hartge P, Spitz MR, Buring JE, et al. Transforming epidemiology for 21st century medicine and public health. Cancer Epidemiol Biomarkers Prev 2013;22(4):508–16.

- Gittelman S, Lange V, Gotway Crawford CA, Okoro CA, Lieb E, Dhingra SS, et al. A new source of data for public health surveillance: Facebook likes. J Med Internet Res 2015;17(4):e98.

- Vogel J, Brown JS, Land T, Platt R, Klompas M. MDPHnet: secure, distributed sharing of electronic health record data for public health surveillance, evaluation, and planning. Am J Public Health 2014;104(12):2265–70.

- Smolinski MS, Crawley AW, Baltrusaitis K, Chunara R, Olsen JM, Wójcik O, et al. Flu Near You: crowdsourced symptom reporting spanning 2 influenza seasons. Am J Public Health 2015;105(10):2124–30.

- Smart Chicago Collaborative. Foodborne Chicago; 2017. http://www.smartchicagocollaborative.org/people/staff-consultants/. Accessed November 20, 2017.

- Lurio J, Morrison FP, Pichardo M, Berg R, Buck MD, Wu W, et al. Using electronic health record alerts to provide public health situational awareness to clinicians. J Am Med Inform Assoc 2010;17(2):217–9.

- Moody-Thomas S, Nasuti L, Yi Y, Celestin MD Jr, Horswell R, Land TG. Effect of systems change and use of electronic health records on quit rates among tobacco users in a public hospital system. Am J Public Health 2015;105(S2, Suppl 2):e1–7.

- Kern LM, Barrón Y, Dhopeshwarkar RV, Edwards A, Kaushal R; HITEC Investigators. Electronic health records and ambulatory quality of care. J Gen Intern Med 2013;28(4):496–503.

- Murphy DR, Laxmisan A, Reis BA, Thomas EJ, Esquivel A, Forjuoh SN, et al. Electronic health record-based triggers to detect potential delays in cancer diagnosis. BMJ Qual Saf 2014;23(1):8–16.

- Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services. 2014 Edition Release 2 Electronic Health Record (EHR) certification criteria and the ONC HIT Certification Program; regulatory flexibilities, improvements, and enhanced health information exchange. Final rule. Fed Regist 2014;79(176):54429–80.

- Gustafsson PE, San Sebastian M, Janlert U, Theorell T, Westerlund H, Hammarström A. Life-course accumulation of neighborhood disadvantage and allostatic load: empirical integration of three social determinants of health frameworks. Am J Public Health 2014;104(5):904–10.

- Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas 1960;20(1):37–46.

- Cicchetti DV, Feinstein AR. High agreement but low kappa: II. Resolving the paradoxes. J Clin Epidemiol 1990;43(6):551–8.

- Lin LI-K. A concordance correlation coefficient to evaluate reproducibility. Biometrics 1989;45(1):255–68.

- Lin LI-K. A note on the concordance correlation coefficient. Biometrics 2000;56(1):324–5.

- McBride GB. A proposal for strength-of-agreement criteria for Lin’s Concordance Correlation Coefficient; 2005. NIWA Client Report: HAM2005-062. Hamilton (NZ): National Institute of Water and Atmospheric Research Ltd. https://www.medcalc.org/download/pdf/McBride2005.pdf. Accessed November 20, 2017.

- Hill EM, Turner EL, Martin RM, Donovan JL. “Let’s get the best quality research we can”: public awareness and acceptance of consent to use existing data in health research: a systematic review and qualitative study. BMC Med Res Methodol 2013;13(1):72.

- Rodriguez HP, Glenn BA, Olmos TT, Krist AH, Shimada SL, Kessler R, et al. Real-world implementation and outcomes of health behavior and mental health assessment. J Am Board Fam Med 2014;27(3):356–66.

- Tisnado DM, Adams JL, Liu H, Damberg CL, Chen WP, Hu FA, et al. What is the concordance between the medical record and patient self-report as data sources for ambulatory care? Med Care 2006;44(2):132–40.

- Singleterry J, Jump Z, DiGiulio A, Babb S, Sneegas K, MacNeil A, et al. State Medicaid coverage for tobacco cessation treatments and barriers to coverage — United States, 2014–2015. MMWR Morb Mortal Wkly Rep 2015;64(42):1194–9.

- Sallis R, Franklin B, Joy L, Ross R, Sabgir D, Stone J. Strategies for promoting physical activity in clinical practice. Prog Cardiovasc Dis 2015;57(4):375–86.

- Krist AH, Glenn BA, Glasgow RE, Balasubramanian BA, Chambers DA, Fernandez ME, et al; MOHR Study Group. Designing a valid randomized pragmatic primary care implementation trial: the my own health report (MOHR) project. Implement Sci 2013;8(1):73.

- Estabrooks PA, Boyle M, Emmons KM, Glasgow RE, Hesse BW, Kaplan RM, et al. Harmonized patient-reported data elements in the electronic health record: supporting meaningful use by primary care action on health behaviors and key psychosocial factors. J Am Med Inform Assoc 2012;19(4):575–82.
